The business information server: generic data checking using PostgreSQL, PostgREST, and Semgrep

For discussion and comments please contact me.

Table of contents

- 1. Introduction

- 2. Overview

- 3. Proof of concept installation and configuration

- 4. Generalizing the concept of checking JSON descriptions of your data

- 5. Summary and outlook

1. Introduction

One thing that all modern organizations have in common, is that they rely on a lot of data. This includes business information like financial records, customer data, or inventory data, but also technical information like server settings or role configurations. As companies grow, it becomes increasingly hard to keep track of all these different aspects. Typically, the data is spread across multiple systems, databases, and files, making it difficult to get a complete picture.

In this article, I will discuss how to create a unified JSON REST-API to funnel data in a central place and how to leverage a rule-checking engine to check that the data is correct according to customizable rules. I will use standard software for this: the database system PostgreSQL (with extensions), the REST-Server PostgREST, and the rule-checking engine Semgrep.

2. Overview

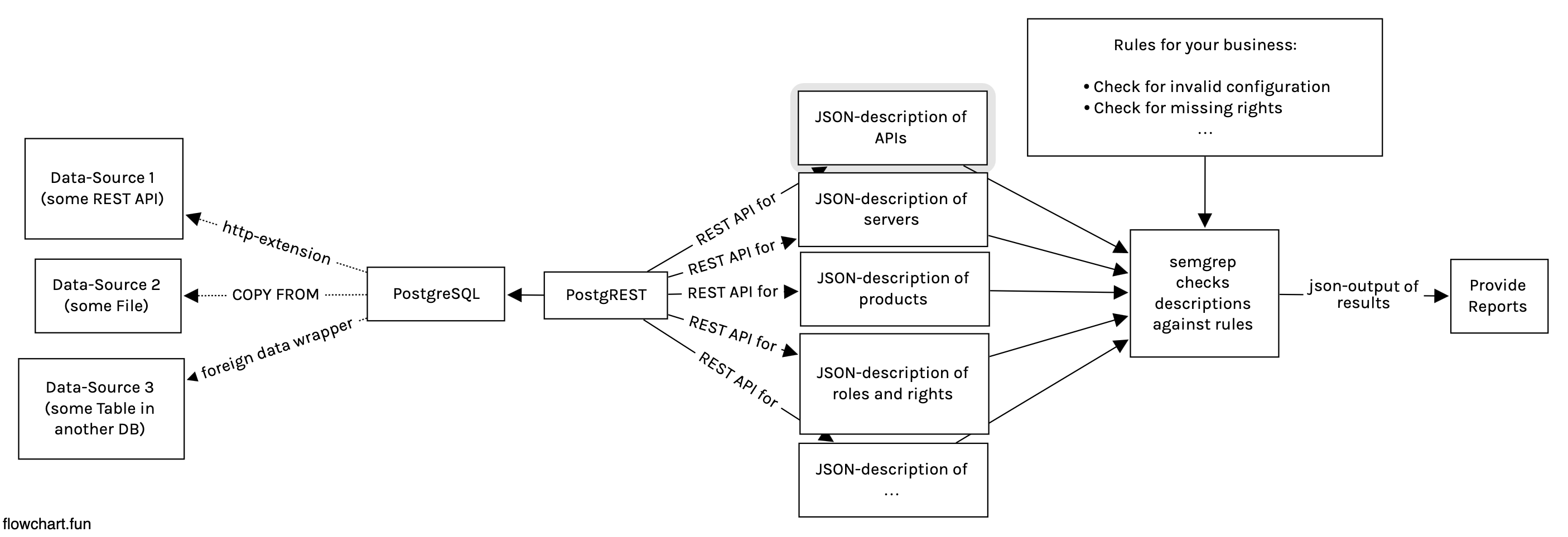

The core idea of my approach is to leverage the versatile features of the database PostgreSQL to combine data from various sources, like APIs, files, or legacy databases into a unified schema. This schema can then be presented and served as a JSON REST-API using PostgREST. The resulting "business information server" is then accessed by a script or build pipeline and Semgrep is used to check the unified descriptions, ensuring their quality and consistency according to specifications.

A diagram of the finished pipeline:

The resulting report is in JSON format as well and can be further processed to create documents or alert stakeholders of any issues that need to be addressed.

This solution has several benefits:

- It allows you to create a complete picture of your data by aggregating it from multiple sources.

- It provides a standard way of describing different aspects of your business in JSON, which can be easily consumed by other tools and systems.

- It allows you to create specifications tailored to your organization, and check your data for compliance.

- My approach is incremental and there is no vendor lock-in. You can start gradually using only some APIs and rules and then iteratively improve and widen the checks.

3. Proof of concept installation and configuration

3.1 Overview

For the setup of the environment, I will assume that you already have PostgreSQL, PostgREST, and Semgrep installed on your machine. You can download the latest versions from the official websites or install them using your package manager.

The proof-of-concept consists of these steps:

- Create a new PostgreSQL database

- Use the pgsql-http-extension for PostgreSQL to link and pull data from REST-APIs into the database

- Configure PostgREST to serve the combined schema as a JSON-API.

- Create Semgrep rules for checking the data for any issues or errors.

3.2 Setting up PostgreSQL to combine data

PostgreSQL has a multitude of possibilities that enable you to integrate data from outside sources into your system:

- The http extension pgsql-http can be used to call arbitrary HTTP endpoints from inside PostgreSQL, allowing you to write views or functions which include HTTP responses.

- COPY FROM can be used to read files or run executables and process the output using SQL.

- Foreign data wrappers can be used to access data from other databases or systems as if they were part of your local database. This can be done using various protocols such as ODBC or JDBC, but also be scripted with Python. Unfortunately, the most useful foreign data wrapper multicorn is not yet compatible with PostgreSQL 12 and it is difficult to install, so I decided to use the pgsql-http extensions for this tutorial.

3.2.1 Configuration steps

Install the http extension with pgxn

pgxn install http

Create a database

createdb business_information_server

Log into the database:

psql business_information_server

Create the schema

You need to add a new schema called api which will contain all the views and tables that will later be exposed in the REST-API for rule-checking.

create schema api;

Create a view using the http-extension to pull data

Add the http extension that you installed and use it to run a GET request against the example-API: https://jsonplaceholder.typicode.com/users. The response will be used to create a view called user in the api-schema.

create extension if not exists http;

create view api.user as

with example_response as (

select json_array_elements(content::json) as user_json

from http_get('http://jsonplaceholder.typicode.com/users')

)

select (user_json->>'id')::int as id,

user_json->>'name' as name,

user_json->>'username' as username,

user_json->>'email' as email,

user_json->'address' as address,

user_json->>'phone' as phone,

user_json->>'website' as website,

user_json->'company' as company

from example_response;

If you select from this view, we already see the data in the system:

\x

select * from api.user;

Output:

-[ RECORD 1 ]--------------------------------------------------------------

id | 1

name | Leanne Graham

username | Bret

email | [email protected]

address | { +

| "street": "Kulas Light", +

| "suite": "Apt. 556", +

| "city": "Gwenborough", +

| "zipcode": "92998-3874", +

| "geo": { +

| "lat": "-37.3159", +

| "lng": "81.1496" +

| } +

| }

phone | 1-770-736-8031 x56442

website | hildegard.org

company | { +

| "name": "Romaguera-Crona", +

| "catchPhrase": "Multi-layered client-server neural-net", +

| "bs": "harness real-time e-markets" +

| }

3.3 Setting up PostgREST to serve the JSON API

Now that the view is set up, you can use PostgREST to expose the data as a JSON-API. PostgREST is a web server that inspects the schema of a PostgreSQL database and automatically generates a RESTful API. If you want to change the API you only need to change the SQL views and the server will adapt.

3.3.1 Configuration Steps

Create authentication roles for PostgREST

It's necessary to add a role in the database to allow the PostgREST server to connect to the database and use the api-schema it should serve. A so-called authenticator role is required as well. You can read the details about this in the manual: PostgREST authentication

-- the role used by the users

create role anonymous nologin;

grant usage on schema api to anonymous;

grant execute on function http to anonymous;

grant select on api.user to anonymous;

-- the role used to connect and switch to anonymous role

create role authenticator noinherit login password 'mysecretpassword';

grant anonymous to authenticator;

Create postgrest.conf file

The configuration of PostgREST is simple. All that is necessary is to configure the PostgreSQL database it should connect to and select the schema it should expose. This is done by creating a configuration file postgrest.conf:

db-uri = "postgres://authenticator:mysecretpassword@localhost:5432/business_information_server"

db-schemas = "api"

db-anon-role = "anonymous"

Start PostgREST-server

The server can then be started as follows. It will listen on port 3000, connect to the PostgreSQL database and serve the schema via REST.

postgrest postgrest.conf

Test the API

You can test the PostgREST-API by sending HTTP requests to it. You can use the excellent filtering capabilities to extract various aspects of the information you are interested in:

- Get all users: http://localhost:3000/user

- Get only the user with id=1: http://localhost:3000/user?id=eq.1

- Get the names of all users: http://localhost:3000/user?select=name

- Get the ids of all users whose email addresses end with '.biz': http://localhost:3000/user?select=id,email&email=like.*biz

3.4 Write a Semgrep rule to check for problems in the data

Analyzing code or data for patterns is traditionally done using either regular expressions or heavyweight tools like SonarQube. While regular expressions can be easily prototyped and run, they tend to get very complex if you need to match slight variations of patterns. Tools like SonarQube allow you to find complex patterns, but they require in-depth knowledge about language parsing and plugin development and the resulting plugin is usually not very readable.

A relatively new tool called semgrep aims to bridge this divide. Semgrep stands for semantic grep, highlighting its position between low-level "grep", a commonplace Unix tool that can find regular expressions, and the high-level frameworks mentioned above.

While grep can only match regular expressions, Semgrep understands the semantics of its supported languages and leverages this knowledge to make writing patterns a lot easier. Examples:

- It ignores variations in whitespace or comments as long as they don't matter to the semantics of the code.

- It knows that the order of keys in a JSON object does not matter, so if you are to write a pattern matching two keys, it will match these keys appearing in either order.

- In imperative languages like Java, it knows about the assignment of variables, so when looking for a value it does not care if whether you have shuffled that value around in various assignments.

Using regular expressions only, it would be complex or impossible to express these variations. Using a syntax-parsing framework would require writing and compiling a plugin. With Semgrep, it is possible to write an expression that supports this in a few simple lines.

3.4.1 Configuration Steps

Write a simple rule

Semgrep rules are written in YAML format. Here's a rule to check for usernames that contain an underscore. The rule will match any JSON object that contains a "username" key with a value that contains an underscore, no matter how deeply nested it will appear.

Save the following snippet to the file my_rules.yaml:

rules:

- id: no_underscores_in_usernames

languages: [json]

message: "Usernames should not contain an underscore: $USERNAME"

severity: INFO

patterns:

- pattern: |

{

"username": "$USERNAME",

...

}

- metavariable-regex:

metavariable: $USERNAME

regex: ".*_.*"

The "..." beneath the username key in the JSON object is necessary, because there might be more keys in the JSON object that is matched. If you omit this, Semgrep will only find objects containing a single key.

Run Semgrep against the business information server

You can now run Semgrep to check the sample API for issues:

curl http://localhost:3000/user > information.json && semgrep scan --config ./my_rules.yaml information.json

Semgrep will output a report of the issues it found, including the file name, the line number, and a description of the issue. The output looks like this:

...

┌─────────────────┐

│ 2 Code Findings │

└─────────────────┘

information.json

no_underscores_in_usernames

Usernames should not contain an underscore: Leopoldo_Corkery

71┆ {"id":6,"name":"Mrs. Dennis ...

...

Using the --json flag in semgrep, this report can be retrieved as json as well for further processing:

curl http://localhost:3000/user > information.json && semgrep scan --json --config ./my_rules.yaml information.json

4. Generalizing the concept of checking JSON descriptions of your data

Semgrep understands many more languages and their intricacies, but for the purpose of this article, I will focus on writing assertions for patterns in declarative languages like YAML or JSON.

4.1 Checking single files with Semgrep

A widely used declarative format is the OpenAPI specification. Semgrep rules can be used to find patterns you want to find in your APIs, like:

- Combinations of path and return code that you want to phase out

- Requests that use GET with a request-body (which is not valid according to the HTTP-specification)

- Naming of your API-endpoints

4.2 Combine and shape data from multiple sources for semgrep

If you want to check patterns across more APIs, you can use the business information server. Your declarative data-checking is not limited to single files, instead, you can combine and preprocess your data in a way that makes it convenient to write the rules you need.

You can for example concatenate the OpenAPI-specifications of all your microservices into one big JSON document and check them for inconsistencies or duplicates:

{

"api1": {

"openapi": "3.1.0",

"info": {

"title": "Example",

"version": "1.0.0"

},

"paths": {

"path1": {}

}

},

"api2": {

"openapi": "3.1.0",

"info": {

"title": "Example",

"version": "1.0.0"

},

"paths": {

"path2": {}

}

}

}

The steps for this are easy:

- Pull the OpenAPI from some endpoints using the

http-extension. - Use the PostgreSQL json functions and SQL to create a unified view.

- Done. PostgREST will automatically serve your view, and you can focus on writing rules.

5. Summary and outlook

My approach is not limited to OpenAPI. Any data that can be transformed into JSON or YAML can be checked using only SQL, standard rules, and this standard pipeline. Semgrep is of course able to match much more complex patterns than the examples above. You can check whether a match is inside some context, apply boolean logic, run math on expressions, and much more.

I will end this article with a short collection of ideas on business information you could pull together and validate. If you have more ideas please write me an email and let me know.

- In ERP systems that support complex product configuration, check for combinations you want to avoid, missing or incorrect prices or descriptions.

- Check Kubernetes declarations for patterns that should be phased out.

- Use Atlassians Confluence-API to check whether wiki-pages are present or named according to your conventions.

- Check server configuration for outdated dependencies or hardcoded credentials.

- Check customer profiles for sensitive information such as credit card or social security numbers.

- Check sales data for discrepancies or irregularities in sales figures.

- Check log files for suspicious activities.

The possibilities are virtually endless and none of these examples require much development. All that is needed is standard software and a bit of SQL.